Random Forest • XGBoost / LightGBM / CatBoost — PDP, ICE, SHAP, Tuning

I implement end-to-end tree-ensemble pipelines in R for classification and regression, with strong diagnostics and clear model explanations. I work fluently with randomForest/ranger, xgboost, lightgbm, and catboost, wrapped in tidymodels/caret or mlr3 for clean resampling and reproducibility.

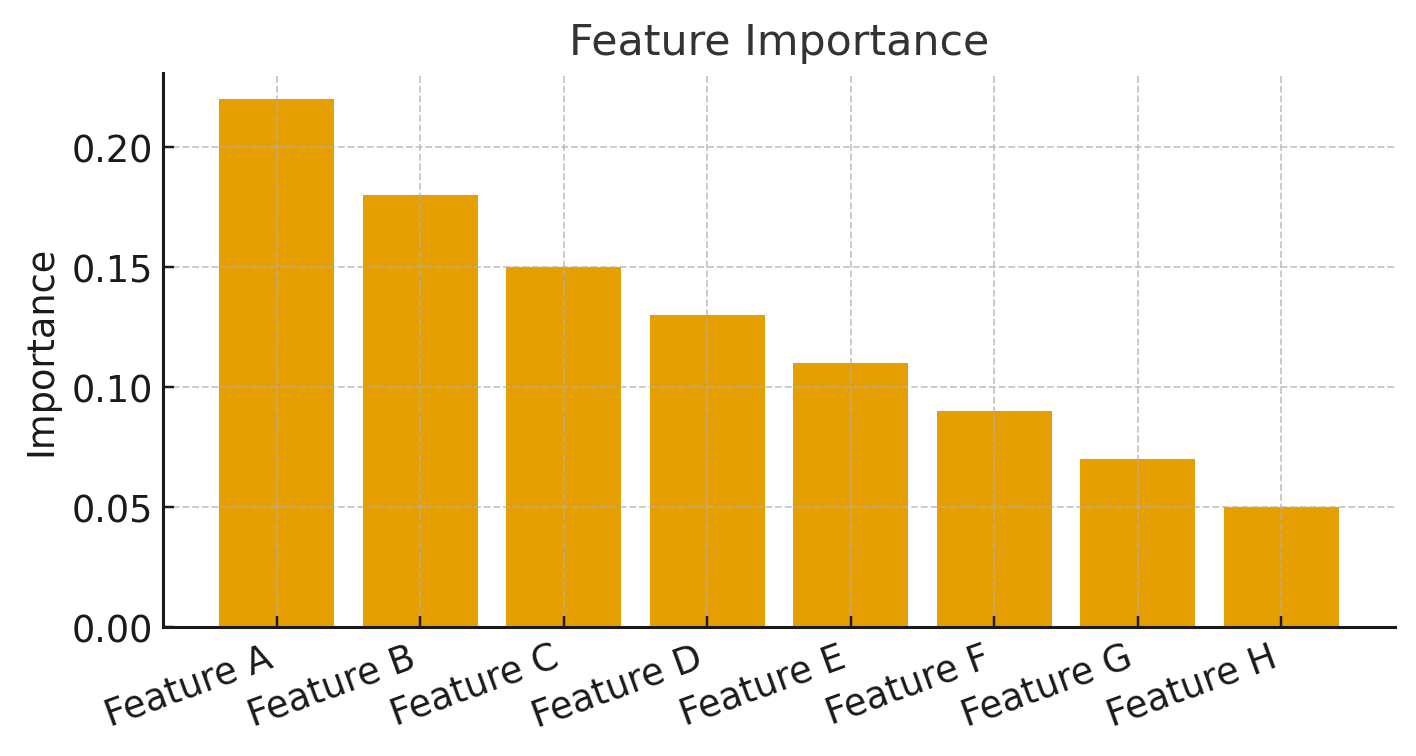

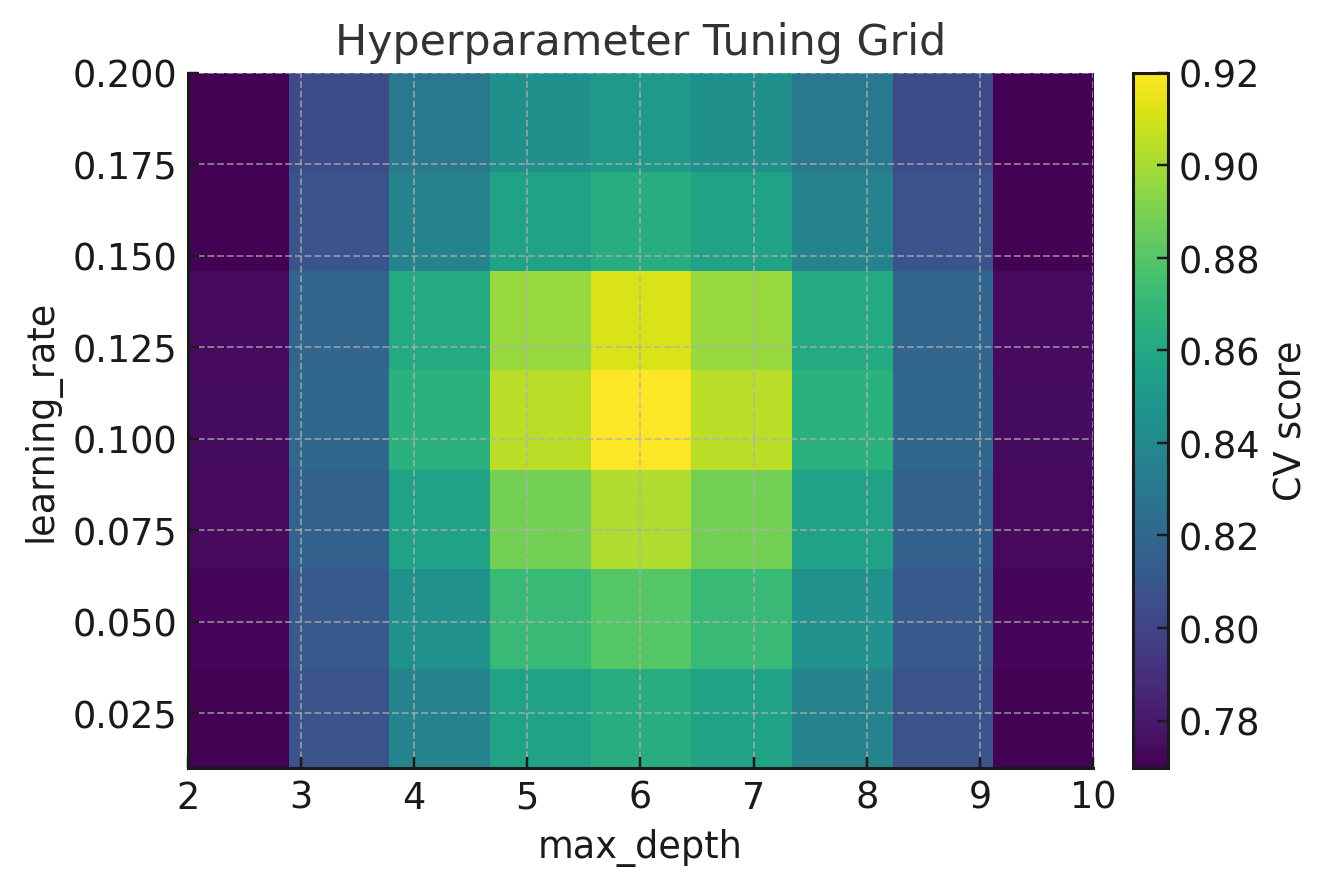

Modelling & tuning: grid, random, and Bayesian search; cross-validation, repeated CV, and time-series CV; early stopping; class-imbalance handling (weights/SMOTE); monotonic constraints (where supported); categorical encodings (one-hot/target/ordered); missing-value strategies; and leakage-safe preprocessing pipelines. I also provide feature importance (gain/cover/permutation), error curves, and calibration plots.

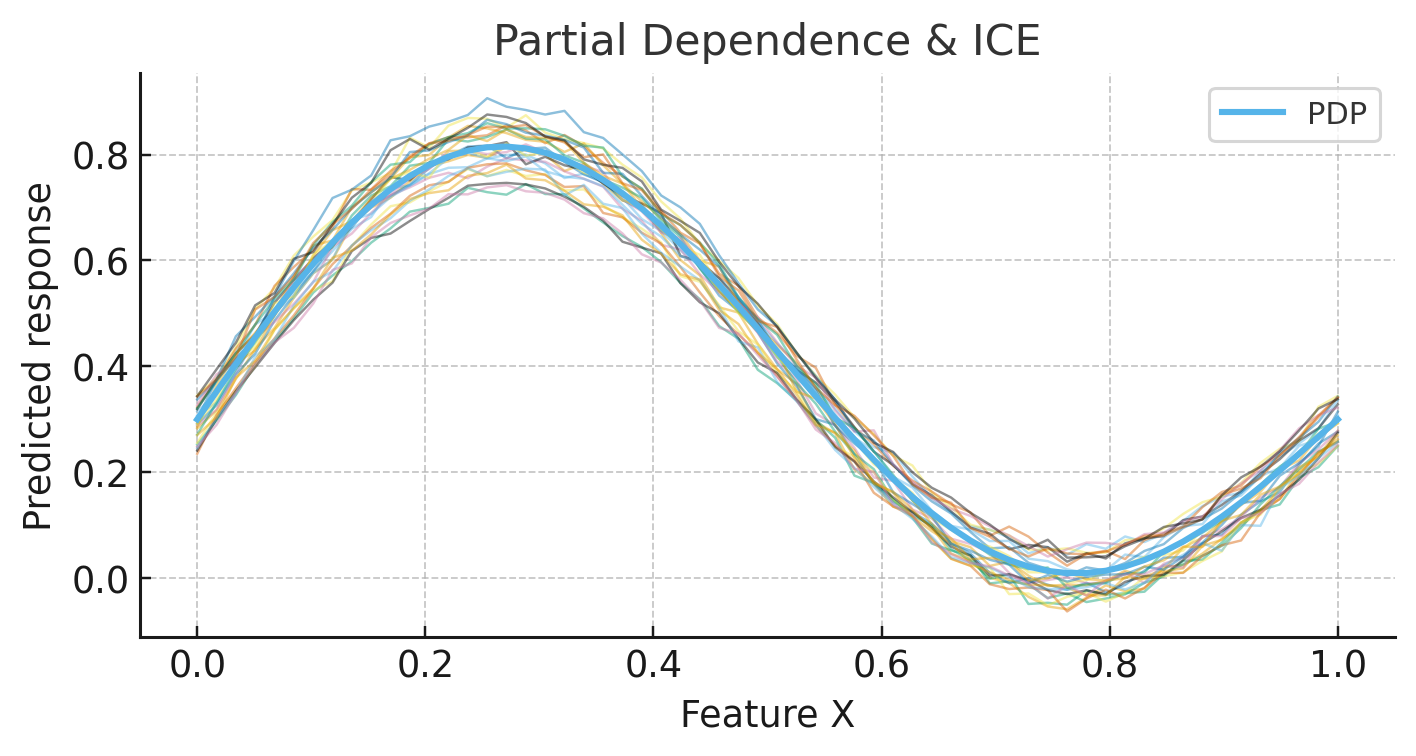

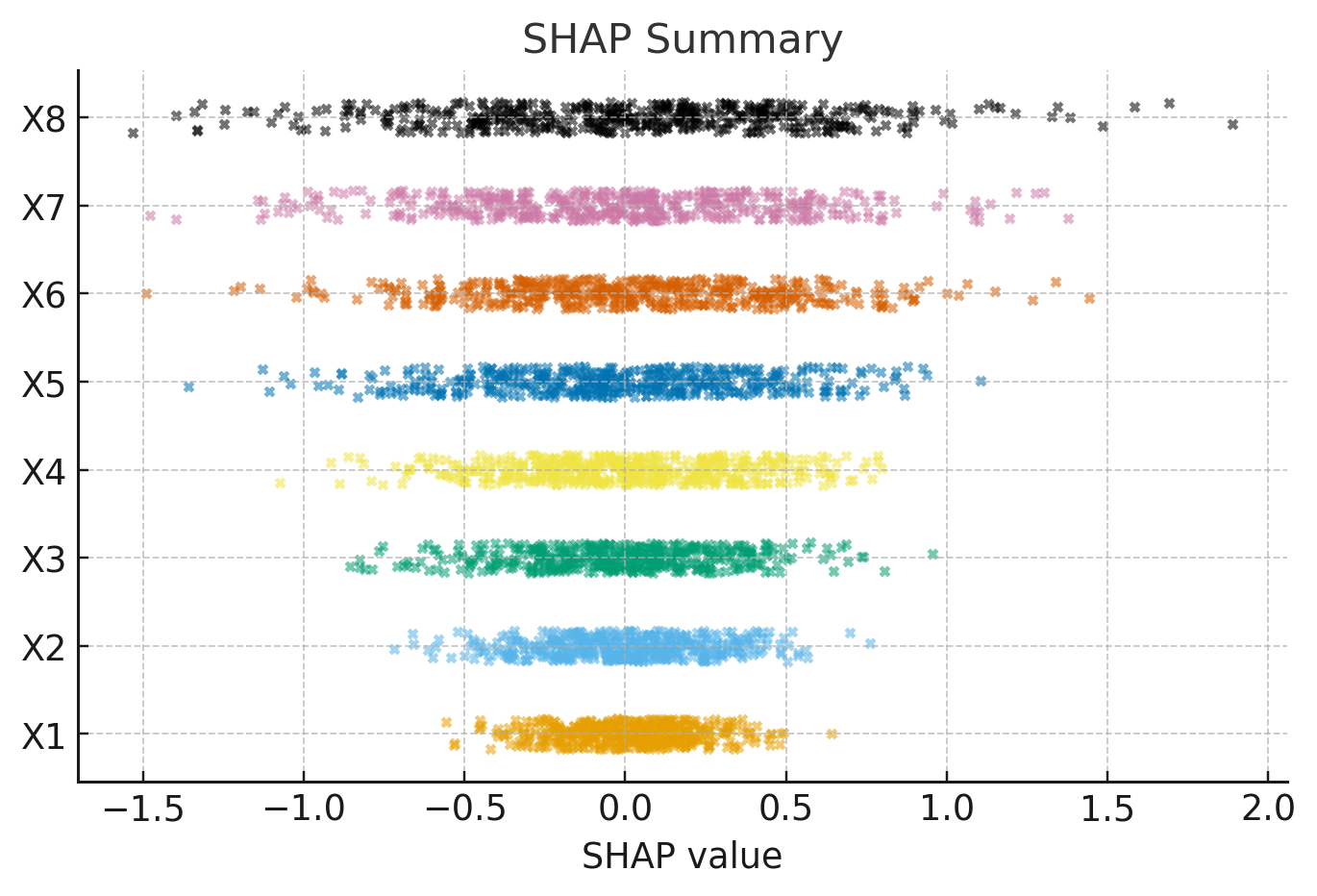

Explainability: PDP/ICE for main effects and interactions, ALE as an alternative to PDP, and SHAP for global and local attributions (summary, dependence, force plots). I can deliver compact narratives that connect model behavior to domain intuition and robustness checks.

Evaluation: accuracy, ROC-AUC, PR-AUC, and log loss for classification; RMSE, MAE, and R² for regression; threshold analysis, decision curves, and fairness slices as needed. Deliverables include clean R scripts, tuned models, and publication-quality figures ready for reports or theses.

I know these methods well and provide hands-on R programming help end to end: data prep, modelling, tuning, interpretation, and deployment-oriented code.

Get help: engagements start at USD 150; fixed quotes follow a brief review of your data and scope.